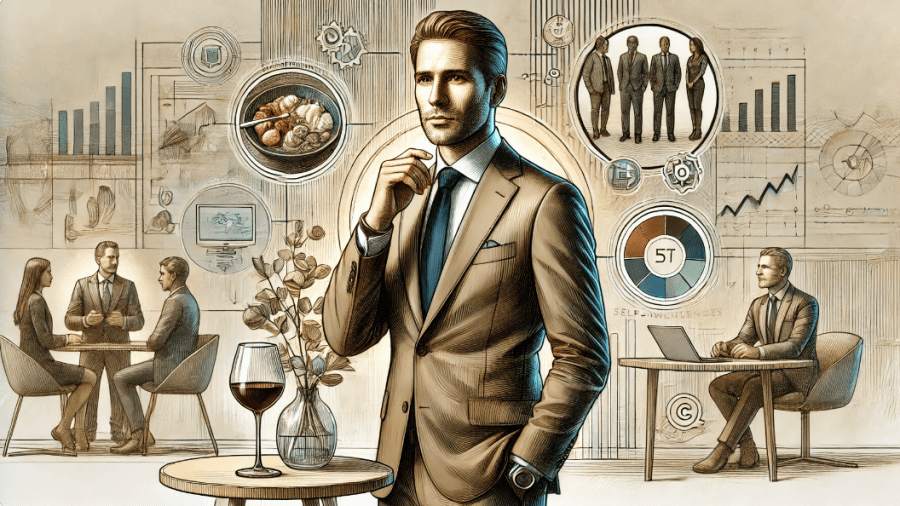

In a recent social media trend, people are asking AI tools to draw or describe what their lives might look like, based solely on a few data points they've previously shared. It sounds like an innocuous, fun way to get a new perspective, but it points to something deeper and perhaps unsettling about the era we live in: how much our online presence gives away about us, even to publicly accessible AI tools.

I recently tried the prompt, "Based on what you know of me, draw a picture of what you think my life currently looks like," with a public AI model. What I got back was a strikingly accurate picture of my life as it stands today. It captured my professional challenges and current ambitions, and it even hinted at parts of my personal life and interests. It felt as though the AI had peered past the screen and into my daily routine. The experience got me thinking: if a public AI can produce such an accurate portrait from what I've told it, what could corporations or governments do with the far larger troves of data they may hold on each of us?

The Data We Leave Behind

Our digital lives leave traces of our personalities, interests, and even our emotional states. Every time we post a picture, like a video, or update an account, we add to a detailed digital profile that AI systems can later draw on to predict our behavior, preferences, and life circumstances. In my case, the AI had access to details I'd shared with it over time (my background, my job challenges, and my interests) and combined these fragments into a surprisingly accurate portrayal of my life.

The Power and Risks of AI Pattern Recognition

AI algorithms work by finding patterns in large amounts of data. From a few inputs, they can draw highly specific conclusions, as my own digital "portrait" showed. Even when a public AI tool has only general information to work with, it can still make deeply personal inferences. Now imagine what private companies or governments could do with unrestricted access to our private data: credit card transactions, location history, health records, browsing habits, and social connections.

Unlike public AI models, which see only the persona we choose to present, private entities may collect data without our meaningful consent, through third-party data-sharing agreements or background tracking technologies, and use it to build far more extensive profiles. Governments and corporations could potentially track us at an extremely granular level, knowing not just our preferences but our routines and psychological triggers, and potentially predicting our future behavior from past data. In the wrong hands, these predictions could be used to manipulate consumer choices, anticipate and steer social trends, or even influence voter behavior on a massive scale.

AI Portraits as a Reality Check

As fun and harmless as these AI portrait prompts may seem, the exercise shows just how much can be gleaned from a few data points. And if a public model can turn those points into a portrait of someone's life, private models built to maximize profit or compliance, rather than to delight, could do far more.

While AI technology can offer us personalized, convenient experiences, it’s crucial for each of us to remain conscious of the digital traces we leave behind. We must also advocate for stronger data privacy laws and demand transparency from both public and private entities on how our data is collected, stored, and used.

As we experiment with AI prompts and digital tools, we should let them remind us to manage our digital footprint thoughtfully, remembering that the sum of our data is more powerful than it seems. AI's capacity to capture personal nuances from fragmented data holds up a mirror to our data-rich lives. It underscores the importance of safeguarding our digital identity in a world that has the capability, and sometimes the incentive, to know us better than we know ourselves.